cURL for scraper/network/web developers

I’ll show some hidden gems of the most popular console HTTP client that are useful for network engineers, proxy users, and web developers.

Intro

For me, cURL remains a clear and simple Swiss Army knife after years of use. I hope you find it and the command line interface useful and convenient. I'll share features and use cases that you might miss if you're new to the tool.

1. Proxy testing

You can check that a proxy URL (both HTTP CONNECT and SOCKS protocols) is working:

- The proxy server socket is reachable
- The IP address you get differs from the IP visible on a direct connection
- The IP address belongs to the GEO location you have chosen
- The proxy session works properly

```shell
# Original ip_addr and GEO
$ curl https://api.myip.com
{"ip":"86.13.22.62","country":"United Kingdom","cc":"GB"}

# Error from the proxy server: wrong auth
$ curl https://api.myip.com -x "iddqd:wifi;gb;;@proxy.soax.com:9016"
curl: (56) CONNECT tunnel failed, response 422

# Valid auth data: ip_addr differs from the original one and the country code meets expectations
$ curl https://api.myip.com -x "deadbeef:wifi;nl;;@proxy.soax.com:9016"
{"ip":"31.201.172.9","country":"Netherlands","cc":"NL"}

# Two sequential calls return the same ip_addr: the session works and is sticky (we avoided IP autorotation)
$ curl https://api.myip.com -x "http://package-1234567-sessionid-giveusatank:deadbeef@proxy.soax.com:5000"
{"ip":"105.69.79.213","country":"Morocco","cc":"MA"}

$ curl https://api.myip.com -x "http://package-1234567-sessionid-giveusatank:deadbeef@proxy.soax.com:5000"
{"ip":"105.69.79.213","country":"Morocco","cc":"MA"}

# Add sessionlength to the HTTP login field, or wait for the default 180 s, and try again.
# Once the session expires, ip_addr changes: we can control the session duration (for how long we keep the same ip_addr)
$ curl https://api.myip.com -x "http://package-1234567-sessionid-giveusatank-sessionlength-180:deadbeef@proxy.soax.com:5000"
{"ip":"105.69.79.213","country":"Morocco","cc":"MA"}
```
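The checklist above can be scripted. The following is a minimal bash sketch, assuming `curl` and `jq` are installed and that `https://api.myip.com` keeps replying with JSON that has `ip` and `cc` fields, as in the replies shown. `compare_ip_replies`, `check_proxy` and `check_sticky` are hypothetical helper names, not part of curl or of the proxy provider's tooling. Reachability and authentication problems are detected only through curl's non-zero exit code (such as the `(56)` above).

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the proxy checklist.
# Assumes curl + jq, and that api.myip.com replies with {"ip": ..., "country": ..., "cc": ...}.

IP_ECHO_URL="https://api.myip.com"

# compare_ip_replies DIRECT_JSON PROXIED_JSON EXPECTED_CC
# Pure comparison logic, no network access.
compare_ip_replies() {
  local direct_ip proxy_ip proxy_cc
  direct_ip=$(jq -r '.ip' <<<"$1") || return 2
  proxy_ip=$(jq -r '.ip' <<<"$2") || return 2
  proxy_cc=$(jq -r '.cc' <<<"$2") || return 2
  if [ "$proxy_ip" = "$direct_ip" ]; then
    echo "FAIL: proxy IP $proxy_ip equals direct IP"
    return 1
  fi
  if [ "$proxy_cc" != "$3" ]; then
    echo "FAIL: proxy IP $proxy_ip is in $proxy_cc, expected $3"
    return 1
  fi
  echo "OK: $proxy_ip ($proxy_cc)"
}

# check_proxy PROXY_URL EXPECTED_CC
# Socket reachability and auth (via curl's exit code), IP change and GEO in one go.
# The 15 s timeout is an arbitrary choice.
check_proxy() {
  local direct proxied
  direct=$(curl -s --max-time 15 "$IP_ECHO_URL") ||
    { echo "FAIL: direct request failed (curl exit $?)"; return 1; }
  proxied=$(curl -s --max-time 15 -x "$1" "$IP_ECHO_URL") ||
    { echo "FAIL: proxy unreachable or rejected (curl exit $?)"; return 1; }
  compare_ip_replies "$direct" "$proxied" "$2"
}

# check_sticky PROXY_URL
# Two sequential calls with a session-based login should return the same IP.
check_sticky() {
  local first second
  first=$(curl -s --max-time 15 -x "$1" "$IP_ECHO_URL" | jq -r '.ip')
  second=$(curl -s --max-time 15 -x "$1" "$IP_ECHO_URL" | jq -r '.ip')
  if [ -n "$first" ] && [ "$first" != "null" ] && [ "$first" = "$second" ]; then
    echo "OK: sticky session, $first"
  else
    echo "FAIL: got '$first' then '$second'"
    return 1
  fi
}

# Usage (live network calls):
# check_proxy "deadbeef:wifi;nl;;@proxy.soax.com:9016" NL
# check_sticky "http://package-1234567-sessionid-giveusatank:deadbeef@proxy.soax.com:5000"
```

With the wrong credentials from the example above, `check_proxy` should report `curl exit 56` instead of an IP, because `-s` hides curl's own error message.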

2. HTTP performance test

A simple performance test can be run against the target HTTP service alone or against target + proxy. It shows how large the latency is and which phases it consists of.

```shell
# Create a file with a custom output format for -w
$ cat curl-format.txt
\n
time_namelookup: %{time_namelookup}\n
time_connect: %{time_connect}\n
time_appconnect: %{time_appconnect}\n
time_pretransfer: %{time_pretransfer}\n
time_redirect: %{time_redirect}\n
time_starttransfer: %{time_starttransfer}\n
----------\n
time_total: %{time_total}\n
```

```shell
# Request without modifications
$ curl -s -w "@curl-format.txt" https://httpbin.org/delay/0
{
  "origin": "185.102.11.225"
}

time_namelookup: 0.001717
time_connect: 0.114930
time_appconnect: 0.347608
time_pretransfer: 0.347990
time_redirect: 0.000000
time_starttransfer: 0.461777
----------
time_total: 0.461838
```
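All `time_*` values printed by `-w` are cumulative, measured from the start of the transfer, so the cost of each phase is the difference between neighbouring timers. A minimal sketch with a hypothetical `curl_phases` helper: it reads six timers per line (namelookup, connect, appconnect, pretransfer, starttransfer, total, i.e. the order of curl-format.txt without `time_redirect`) and prints per-phase durations. The phase labels are my own naming, not curl's.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn curl's cumulative timers into per-phase durations.
# Input line: namelookup connect appconnect pretransfer starttransfer total
# Note: for plain http:// URLs time_appconnect is 0, so tls_handshake is meaningless there.
curl_phases() {
  awk '{
    printf "dns:           %.6f\n", $1
    printf "tcp_connect:   %.6f\n", $2 - $1
    printf "tls_handshake: %.6f\n", $3 - $2
    # request sent -> first response byte: one round trip plus server processing
    printf "server_wait:   %.6f\n", $5 - $4
    printf "body_transfer: %.6f\n", $6 - $5
    printf "total:         %.6f\n", $6
  }'
}

# Live usage: print only the timers (the body goes to /dev/null) and pipe them in.
# curl -s -o /dev/null \
#   -w '%{time_namelookup} %{time_connect} %{time_appconnect} %{time_pretransfer} %{time_starttransfer} %{time_total}\n' \
#   https://httpbin.org/delay/0 | curl_phases
```

Applied to the run above, this attributes about 0.113 s to the TCP handshake, about 0.233 s to the TLS handshake and about 0.114 s to waiting for the first byte, so TLS is the largest single phase.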

```shell
# This is how a 3-second server-side delay looks: only time_starttransfer (and so time_total) grows by about 3 s
$ curl -s -w "@curl-format.txt" https://httpbin.org/delay/3
{
  "origin": "185.102.11.225"
}

time_namelookup: 0.001993
time_connect: 0.118725
time_appconnect: 0.361365
time_pretransfer: 0.361727
time_redirect: 0.000000
time_starttransfer: 3.480000
----------
time_total: 3.480220
```

```shell
# This is how a slow connection through a proxy in a distant country (ZA) looks.
# time_connect is the TCP connect to the proxy; the CONNECT tunnel and the TLS handshake with the target show up in time_appconnect
$ curl -w "@curl-format.txt" https://httpbin.org/delay/0 -x "http://package-1234567-country-za:deadbeef@proxy.soax.com:5000"
time_namelookup: 0.001413
time_connect: 0.041881
time_appconnect: 1.494866
time_pretransfer: 1.495128
time_redirect: 0.000000
time_starttransfer: 2.201573
----------
time_total: 2.201681
```
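A single run is noisy, especially through a proxy. A minimal sketch that repeats a request and reports min/avg/max of `time_total`; the `sample_total` name and the default of 5 runs are arbitrary assumptions, and any extra arguments (for example `-x PROXY_URL`) are passed straight to curl.

```shell
#!/usr/bin/env bash
# Hypothetical helper: repeat a request N times and summarize time_total.
# Usage: sample_total URL [N] [extra curl args...]
sample_total() {
  local url=$1 runs=${2:-5}
  shift $(( $# < 2 ? $# : 2 ))
  local i
  for (( i = 0; i < runs; i++ )); do
    # -o /dev/null drops the body; -w prints only the total time of this run
    curl -s -o /dev/null --max-time 30 -w '%{time_total}\n' "$@" "$url"
  done | awk '
    NR == 1 || $1 < min { min = $1 }
    NR == 1 || $1 > max { max = $1 }
    { sum += $1 }
    END { if (NR) printf "runs=%d min=%.3f avg=%.3f max=%.3f\n", NR, min, sum / NR, max }'
}
```

For example, compare `sample_total https://httpbin.org/delay/0 10` with `sample_total https://httpbin.org/delay/0 10 -x "http://package-1234567-country-za:deadbeef@proxy.soax.com:5000"` to see how much the distant exit adds on average rather than in one lucky or unlucky run.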

3. libcurl

The curl command line tool and libcurl are so popular that you can easily find HTTP clients built on that library (and therefore sharing the same semantics). You can also generate client code for other popular HTTP clients with an online service or an open-source library.

```shell
curl -X POST https://httpbin.com/api/post/json \
  -H 'Content-Type: application/json' \
  -d '{"login":"my_login","password":"my_password"}'
```

can be turned into this OkHttp (Java) client code:

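```java
import java.io.IOException;

import okhttp3.MediaType;
import okhttp3.OkHttpClient;
import okhttp3.Request;
import okhttp3.RequestBody;
import okhttp3.Response;

public class PostJson {
    private static final MediaType JSON = MediaType.get("application/json");

    public static void main(String[] args) throws IOException {
        OkHttpClient client = new OkHttpClient();

        String requestBody = "{\"login\":\"my_login\",\"password\":\"my_password\"}";
        // OkHttp 4.x argument order (content, mediaType); the MediaType also sets Content-Type
        RequestBody body = RequestBody.create(requestBody, JSON);

        Request request = new Request.Builder()
                .url("https://httpbin.com/api/post/json")
                .post(body)
                .build();

        try (Response response = client.newCall(request).execute()) {
            if (!response.isSuccessful()) throw new IOException("Unexpected code " + response);
            System.out.println(response.body().string());
        }
    }
}
```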
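curl can also go the other way and write libcurl code for you: the `--libcurl FILE` option makes the command line tool save C source code that performs the same transfer through libcurl (the request itself is still executed). A minimal sketch; the output filename `post_json.c` is arbitrary, and `--max-time 10` is only there so the example cannot hang.

```shell
#!/usr/bin/env bash
# Perform the POST from above and dump equivalent libcurl C source to post_json.c
curl -s --max-time 10 -X POST https://httpbin.com/api/post/json \
  -H 'Content-Type: application/json' \
  -d '{"login":"my_login","password":"my_password"}' \
  --libcurl post_json.c

# Show the generated curl_easy_setopt() calls (URL, headers, POST body, ...)
grep 'curl_easy_setopt' post_json.c
```

The generated file contains a `main()` function, so it should build with something like `cc post_json.c -lcurl`.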

4. Manipulating JSON responses

Combined with the jq command line tool, working with the JSON document in a response payload is fast and handy: pretty-print, filter, find-replace, etc.

```shell
# To pretty print
$ curl -s 'https://httpbin.org/get?show_env=1' | jq '.'
{
  "args": {
    "show_env": "1"
  },
  "headers": {
    "Accept": "*/*",
    "Host": "httpbin.org",
    "User-Agent": "curl/8.5.0",
    "X-Amzn-Trace-Id": "Root=1-65b39818-14c7e17000ca2b146d699e36",
    "X-Forwarded-For": "185.102.11.225",
    "X-Forwarded-Port": "443",
    "X-Forwarded-Proto": "https"
  },
  "origin": "185.102.11.225",
  "url": "https://httpbin.org/get?show_env=1"
}

# To select a nested object value
$ curl -s 'https://httpbin.org/get?show_env=1' | jq '.headers."User-Agent"'
"curl/8.5.0"
```

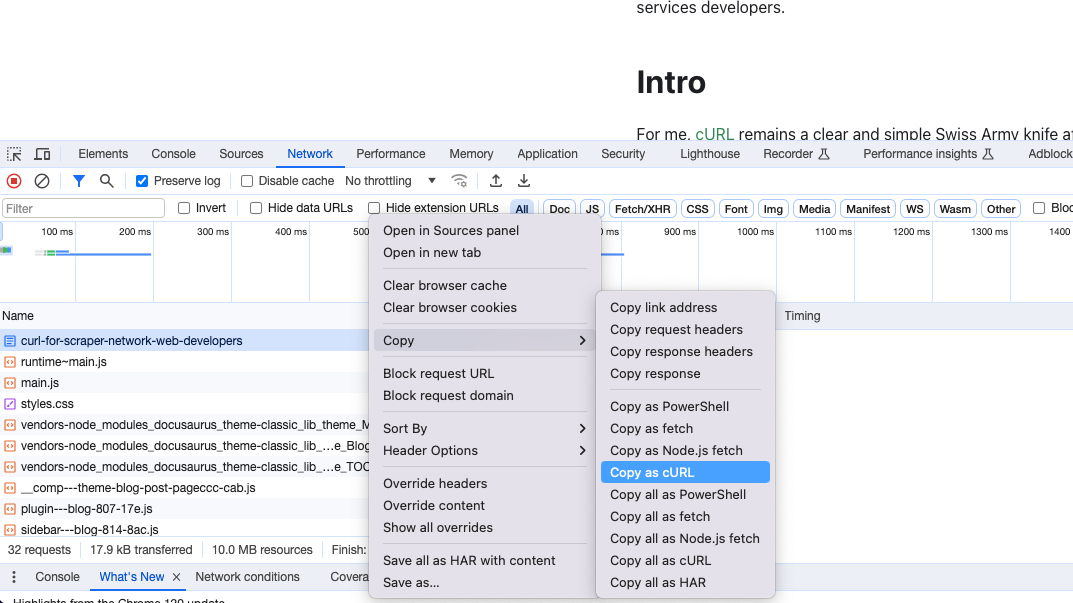
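Besides pretty-printing and selecting, jq also covers filtering and find-replace. A few more one-liners, run here against a trimmed copy of the httpbin.org reply above stored in a shell variable so they work offline (the variable name `reply` is arbitrary); `with_entries`, `select`, `startswith`, `del` and the `-r`/`-c` flags are standard jq features.

```shell
#!/usr/bin/env bash
# Trimmed copy of the https://httpbin.org/get?show_env=1 reply shown above
reply='{"args":{"show_env":"1"},"headers":{"Accept":"*/*","Host":"httpbin.org","User-Agent":"curl/8.5.0","X-Forwarded-Port":"443","X-Forwarded-Proto":"https"},"origin":"185.102.11.225"}'

# Filter: keep only the X-* headers
jq '.headers | with_entries(select(.key | startswith("X-")))' <<<"$reply"

# Find-replace: overwrite one value and drop a key, compact output (-c)
jq -c '.headers.Host = "example.org" | del(.args)' <<<"$reply"

# Raw output (-r) strips the JSON quotes, handy for shell variables
ua=$(jq -r '.headers."User-Agent"' <<<"$reply")
echo "$ua"

# Live version of the last filter, piped straight from curl:
# curl -s 'https://httpbin.org/get?show_env=1' | jq -r '.headers."User-Agent"'
```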

5. Integration with WEB browser DevTools

Via DevTools, you can “convert” any HTTP request made by your web browser (Chrome, Firefox, Edge), with all its payload and headers, into a curl command to repeat and play with later.

- Open the Network tab > right-click the request you want to copy > Copy > Copy as cURL.
- Paste the text into your favorite terminal app.
- Remove unnecessary headers, cookies, params, and payload, and test that the request still works.
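The trimming step can be partly automated. A minimal bash sketch, assuming the endpoint signals success through the HTTP status code: the hypothetical `minimize_headers` helper replays the request once per copied header, each time without that header, and prints the resulting status. It only varies `-H` headers; any other options in the copied command (for example a `--data-raw` payload) are not handled. An unchanged status suggests the header is not required, but it does not prove the response content is identical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: which of the headers from "Copy as cURL" are really needed?
# Usage: minimize_headers URL 'Name: value' ['Name: value' ...]
minimize_headers() {
  local url=$1; shift
  local headers=("$@") i j status
  for i in "${!headers[@]}"; do
    local args=()
    for j in "${!headers[@]}"; do
      # Send every header except the one under test
      [ "$j" = "$i" ] || args+=(-H "${headers[$j]}")
    done
    # Body is discarded; only the status code is printed
    status=$(curl -s -o /dev/null --max-time 15 -w '%{http_code}' "${args[@]}" "$url")
    echo "without ${headers[$i]%%:*}: HTTP $status"
  done
}

# Example with placeholder header values:
# minimize_headers 'https://httpbin.org/get' 'Accept: */*' 'Accept-Language: en-US' 'Cookie: a=b'
```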